Fourier transforms

Fourier transforms are mathematical operations that, when applied to a function, decompose it into its constituent frequencies. In the Fourier Series course, we showed that a periodic function can be expressed as an infinite sum of sine and cosine functions. We also showed that, through scaling laws, the period of the function can be extended to an arbitrary length. If we keep the highest frequency in the Fourier Series the same while extending the period, the spacing between successive frequencies shrinks and the sum becomes longer and longer. In the limit where the period goes to infinity, the sum becomes an integral over a continuous range of frequencies, which leads to the definition of the Fourier Transform.

The Fourier Transform, which maps a function \(f(t)\) to a new function \(F(\omega)\), is defined as

\[
F(\omega) = \int_{-\infty}^{\infty} f(t)\, e^{-i\omega t}\, dt.
\]

Using this definition, \(f(t)\) is given by the Inverse Fourier Transform

\[
f(t) = \frac{1}{2\pi} \int_{-\infty}^{\infty} F(\omega)\, e^{i\omega t}\, d\omega.
\]

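Combining these two expressions, we can write

\[
f(t) = \frac{1}{2\pi} \int_{-\infty}^{\infty} e^{i\omega t} \left[ \int_{-\infty}^{\infty} f(t')\, e^{-i\omega t'}\, dt' \right] d\omega.
\]
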
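This is known as Fourier’s Integral Theorem. It shows that any sufficiently well-behaved function (roughly, one that is absolutely integrable and piecewise smooth) can be represented as a continuous sum (an integral) of sine and cosine functions, through \(e^{i\omega t} = \cos(\omega t) + i\sin(\omega t)\), linking back to the Fourier Series.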

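Note that this definition of the Fourier Transform is not unique. Conventions differ, for example, in where the factor of \(1/2\pi\) is placed (some split it as \(1/\sqrt{2\pi}\) between the transform and its inverse) and in the sign of the exponent, but we will stick with this one for this course.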
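To make the definition concrete, here is a minimal numerical sketch. It assumes the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\) with the \(1/2\pi\) on the inverse, approximates both integrals by Riemann sums on finite, uniform grids (adequate for smooth functions that decay quickly), and checks Fourier’s Integral Theorem by transforming a test function and transforming back. The helper names `fourier_transform` and `inverse_fourier_transform` and the test function are hypothetical, not part of any library.

```python
import numpy as np

# Assumed convention: F(w) = ∫ f(t) e^{-iwt} dt  and  f(t) = (1/2π) ∫ F(w) e^{iwt} dw.
def fourier_transform(f_vals, t, omega):
    """Riemann-sum approximation of F(omega) from samples f_vals on a uniform grid t."""
    dt = t[1] - t[0]
    return np.exp(-1j * np.outer(omega, t)) @ f_vals * dt

def inverse_fourier_transform(F_vals, omega, t):
    """Riemann-sum approximation of f(t) from samples F_vals on a uniform grid omega."""
    dw = omega[1] - omega[0]
    return np.exp(1j * np.outer(t, omega)) @ F_vals * dw / (2 * np.pi)

t = np.linspace(-10, 10, 1001)
omega = np.linspace(-20, 20, 801)
f = np.exp(-t**2 / 2) * (1 + 0.5 * t)   # hypothetical smooth, rapidly decaying test function

F = fourier_transform(f, t, omega)
f_back = inverse_fourier_transform(F, omega, t)

# Fourier's Integral Theorem: transforming and transforming back recovers f(t)
print(np.max(np.abs(f_back - f)))
```

The grids only need to cover the region where the function and its transform are non-negligible; for functions with slowly decaying transforms, a much finer and wider grid (or an FFT-based approach) would be needed.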

Notation

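We will represent the Fourier Transform operator using the calligraphic symbol \(\mathcal{F}\), writing \(\mathcal{F}[f(t)]\) for the transform of \(f(t)\), such that

\[
\mathcal{F}[f(t)] = F(\omega) = \int_{-\infty}^{\infty} f(t)\, e^{-i\omega t}\, dt.
\]
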
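Using this notation, we will represent the Inverse Fourier Transform operator as \(\mathcal{F}^{-1}[F(\omega)]\) such that

\[
\mathcal{F}^{-1}[F(\omega)] = f(t) = \frac{1}{2\pi} \int_{-\infty}^{\infty} F(\omega)\, e^{i\omega t}\, d\omega.
\]
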
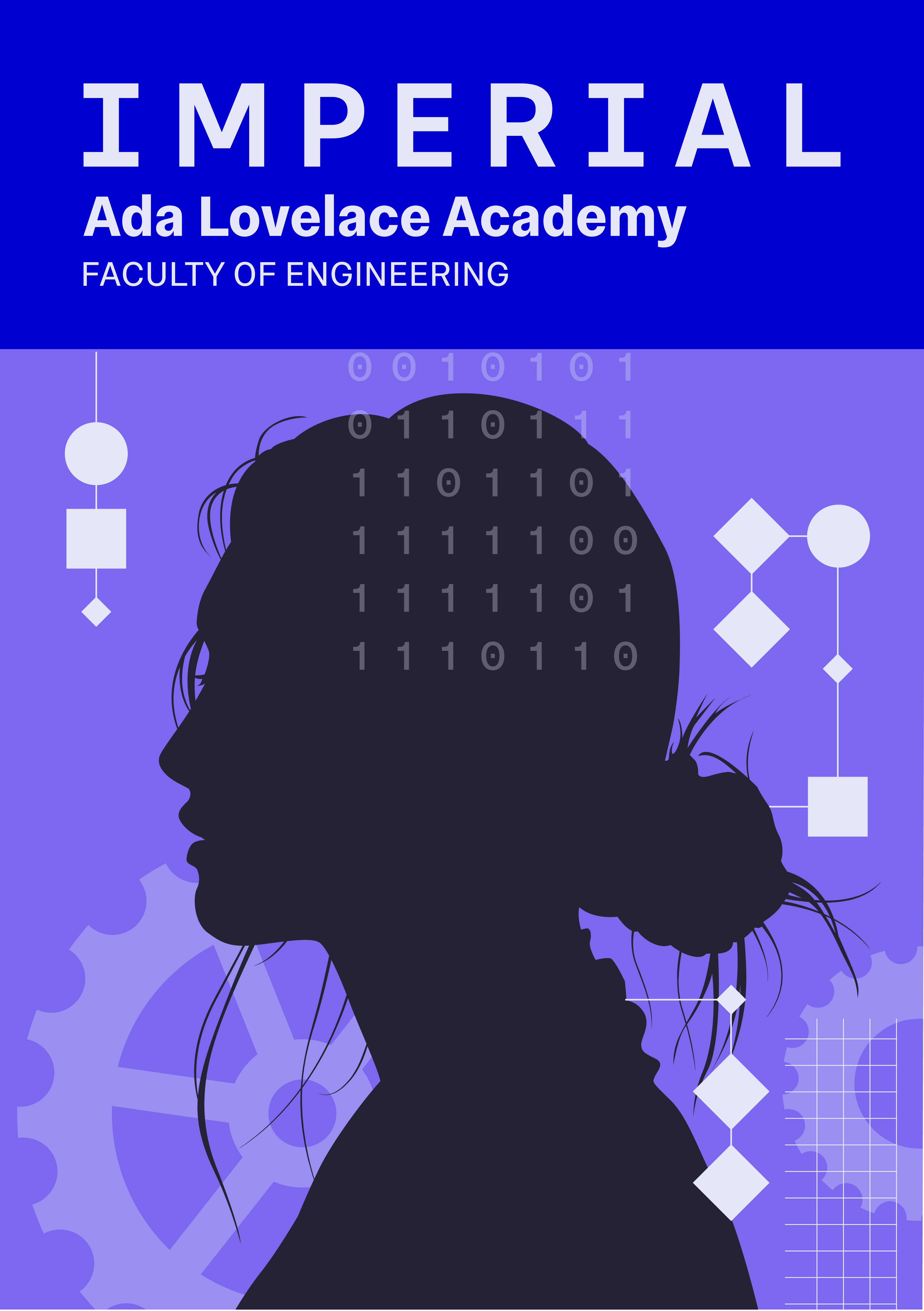

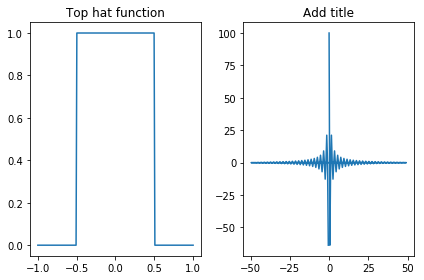

Let’s look at an example: the top hat function, defined as

with the Fourier Transform

Let’s plot the function and its Fourier Transform.
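As a minimal sketch, assume a top hat of unit height for \(|t| < a\) and zero elsewhere (the height and the half-width \(a\) are our own choices and may differ from the definition used in this course). Direct integration then gives \(F(\omega) = 2\sin(\omega a)/\omega\) under the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\), and the code compares this with a numerical transform. The arrays computed here are the ones you would hand to a plotting library such as Matplotlib.

```python
import numpy as np

# Hypothetical top hat: height 1 for |t| < a, zero elsewhere (a is an illustrative choice)
a = 1.0
dt = 2e-3
t = np.arange(-3, 3, dt) + dt / 2          # cell midpoints, so the edges at ±a fall on cell boundaries
top_hat = np.where(np.abs(t) < a, 1.0, 0.0)

omega = np.linspace(-30, 30, 601)

# Numerical transform with the assumed convention F(w) = ∫ f(t) e^{-iwt} dt
F_num = np.exp(-1j * np.outer(omega, t)) @ top_hat * dt

# Analytic transform 2 sin(wa)/w, written with np.sinc(x) = sin(pi x)/(pi x) to handle w = 0
F_exact = 2 * a * np.sinc(omega * a / np.pi)

print(np.max(np.abs(F_num - F_exact)))     # small, limited by the grid spacing dt
```

The transform is real because this top hat is even, and its zeros fall at \(\omega = n\pi/a\) for non-zero integers \(n\).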

Special functions and their Fourier Transforms

This section focuses on two useful functions and their Fourier Transforms: the delta function and the Gaussian function.

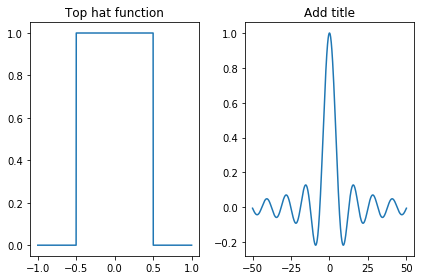

Delta function

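The delta function, \(\delta(t)\), is defined as

\[
\delta(t) = 0 \quad \text{for } t \neq 0, \qquad \int_{-\infty}^{\infty} \delta(t)\, dt = 1,
\]

so that, for any well-behaved function \(f(t)\), \(\int_{-\infty}^{\infty} f(t)\,\delta(t - t_0)\,dt = f(t_0)\).

Carrying out the transform, we see that the Fourier Transform of the delta function is actually a constant:

\[
\mathcal{F}[\delta(t)] = \int_{-\infty}^{\infty} \delta(t)\, e^{-i\omega t}\, dt = e^{0} = 1.
\]
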
Let’s plot the function and its transform.

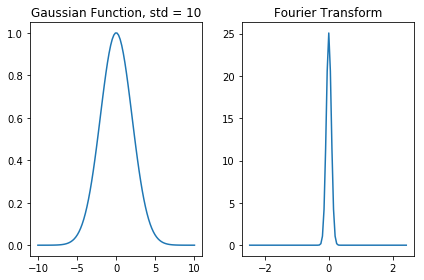

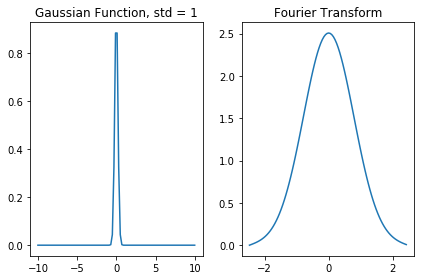
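Since \(\delta(t)\) cannot be sampled directly, a minimal numerical sketch can approximate it by a very narrow normalised Gaussian of width \(\epsilon\) (an assumption of this example; any sufficiently narrow peak of unit area would do). Under the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\), its transform stays close to 1 over a wide range of \(\omega\), approaching the constant transform of the delta function as \(\epsilon \to 0\).

```python
import numpy as np

eps = 0.01                                   # width of the narrow Gaussian standing in for delta(t)
dt = eps / 20
t = np.arange(-10 * eps, 10 * eps, dt)
delta_approx = np.exp(-t**2 / (2 * eps**2)) / (eps * np.sqrt(2 * np.pi))   # unit area

omega = np.linspace(-10, 10, 201)
F = np.exp(-1j * np.outer(omega, t)) @ delta_approx * dt

# The transform is nearly flat: it deviates from 1 by less than 1% for |w| <= 10
print(np.max(np.abs(F - 1)))
```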

Gaussian function

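A Gaussian function is defined as

\[
g(t) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{t^2}{2\sigma^2}\right),
\]

where \(\sigma\) is the standard deviation of the Gaussian. The Fourier Transform of a Gaussian is another Gaussian, such that

\[
\mathcal{F}[g(t)] = \exp\left(-\frac{\sigma^2 \omega^2}{2}\right).
\]
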
Thus, we can see that as the Gaussian function gets broader, its Fourier Transform gets narrower: its width in \(\omega\) is \(1/\sigma\). To illustrate this, let’s plot the Gaussian and its transform for two different standard deviations.
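A minimal sketch of this comparison, assuming the normalised Gaussian \(g(t) = e^{-t^2/2\sigma^2}/(\sigma\sqrt{2\pi})\) and the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\). It computes the transform numerically for two illustrative standard deviations, \(\sigma = 0.5\) and \(\sigma = 2\), checks it against \(\exp(-\sigma^2\omega^2/2)\), and reports the half width at half maximum in \(\omega\), which scales as \(1/\sigma\).

```python
import numpy as np

def gaussian(t, sigma):
    """Normalised Gaussian with standard deviation sigma, centred on t = 0."""
    return np.exp(-t**2 / (2 * sigma**2)) / (sigma * np.sqrt(2 * np.pi))

t = np.linspace(-40, 40, 4001)
dt = t[1] - t[0]
omega = np.linspace(-10, 10, 401)

errors = {}
for sigma in (0.5, 2.0):
    F_num = np.exp(-1j * np.outer(omega, t)) @ gaussian(t, sigma) * dt
    F_exact = np.exp(-sigma**2 * omega**2 / 2)    # Gaussian in omega with width 1/sigma
    errors[sigma] = np.max(np.abs(F_num - F_exact))
    # Half width at half maximum in omega, read off the analytic transform
    hwhm = np.sqrt(2 * np.log(2)) / sigma
    print(f"sigma = {sigma}: max error {errors[sigma]:.1e}, HWHM in omega = {hwhm:.3f}")
```

Quadrupling \(\sigma\) from 0.5 to 2 shrinks the width of the transform by the same factor of four.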

Properties of Fourier Transforms

When determining the Fourier Transform of a function, a number of properties can make our calculations easier, or even let us recognise the transform of the function in question as one we already know. Being familiar with these properties is therefore of great use when working out the transforms of specific functions.

Even and odd functions

In general, the Fourier Transform, \(F(\omega)\), of a function \(f(t)\) will be complex and thus can be written as

\[
F(\omega) = R(\omega) + iI(\omega),
\]

where \(R(\omega)\) and \(I(\omega)\) are real. For a real-valued function \(f(t)\), we can show that the real part of the transform, \(R(\omega)\), comes from the even part of the function, while the imaginary term, \(iI(\omega)\), comes from the odd part of the function.

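Let’s start with an even function, \(f(t)\), and determine its Fourier Transform by splitting the integral at \(t = 0\):

\[
F(\omega) = \int_{-\infty}^{0} f(t)\, e^{-i\omega t}\, dt + \int_{0}^{\infty} f(t)\, e^{-i\omega t}\, dt.
\]

Since \(f(t)\) is even, we can substitute \(t \to -t\) in the first integral and use \(f(-t) = f(t)\), resulting in

\[
F(\omega) = \int_{0}^{\infty} f(t) \left( e^{i\omega t} + e^{-i\omega t} \right) dt = 2\int_{0}^{\infty} f(t)\cos(\omega t)\, dt.
\]
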
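Because \(f(t)\) and \(\cos(\omega t)\) are both even functions, their product is even, so we can write

\[
F(\omega) = \int_{-\infty}^{\infty} f(t)\cos(\omega t)\, dt,
\]
which is real whenever \(f(t)\) is real.
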
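Using a similar procedure, we can derive that for an odd function \(f(t)\) the Fourier Transform becomes

\[
F(\omega) = -2i\int_{0}^{\infty} f(t)\sin(\omega t)\, dt = -i\int_{-\infty}^{\infty} f(t)\sin(\omega t)\, dt.
\]
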
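Thus, the Fourier Transform of a real even function, \(e(t)\), is real, while the Fourier Transform of a real odd function, \(o(t)\), is purely imaginary. We can take this further by writing any function as the sum of an even and an odd part, \(f(t) = e(t) + o(t)\), with \(e(t) = [f(t) + f(-t)]/2\) and \(o(t) = [f(t) - f(-t)]/2\). As stated earlier, the Fourier Transform of a function can be written as \(F(\omega) = R(\omega) + iI(\omega)\). Using these results, we can show that for a real function \(f(t)\)

\[
\mathcal{F}[e(t)] = R(\omega) = \int_{-\infty}^{\infty} e(t)\cos(\omega t)\, dt, \qquad \mathcal{F}[o(t)] = iI(\omega) = -i\int_{-\infty}^{\infty} o(t)\sin(\omega t)\, dt.
\]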
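A minimal numerical check of these results, using a hypothetical real test function \(f(t) = e^{-(t-1)^2}\) that is neither even nor odd, and the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\). On a grid that is symmetric about \(t = 0\), reversing the sample array gives \(f(-t)\), so the even and odd parts are easy to form.

```python
import numpy as np

t = np.linspace(-15, 15, 3001)        # symmetric grid: reversing an array maps t -> -t
dt = t[1] - t[0]
omega = np.linspace(-5, 5, 101)

def ft(samples):
    """Riemann-sum Fourier transform, assuming F(w) = ∫ f(t) e^{-iwt} dt."""
    return np.exp(-1j * np.outer(omega, t)) @ samples * dt

f = np.exp(-(t - 1.0)**2)             # hypothetical real function, neither even nor odd
e = (f + f[::-1]) / 2                 # even part, e(t) = [f(t) + f(-t)] / 2
o = (f - f[::-1]) / 2                 # odd part,  o(t) = [f(t) - f(-t)] / 2

R = ft(e)                             # should be purely real
iI = ft(o)                            # should be purely imaginary

print(np.max(np.abs(R.imag)), np.max(np.abs(iI.real)))   # both close to 0
print(np.max(np.abs(ft(f) - (R + iI))))                  # F(w) = R(w) + iI(w)
```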
Linearity and superposition

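A Fourier Transform is linear, meaning that for a function \(f(t) = af_1(t) + bf_2(t)\) the Fourier Transform becomes

\[
\mathcal{F}[a f_1(t) + b f_2(t)] = a F_1(\omega) + b F_2(\omega),
\]

where \(F_1(\omega)\) and \(F_2(\omega)\) are the transforms of \(f_1(t)\) and \(f_2(t)\).
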
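This is easily proven from the definition of the Fourier Transform. Consider the transform of \(f(t) = af_1(t) + bf_2(t)\):

\[
\begin{aligned}
\mathcal{F}[a f_1(t) + b f_2(t)] &= \int_{-\infty}^{\infty} \left[ a f_1(t) + b f_2(t) \right] e^{-i\omega t}\, dt \\
&= a\int_{-\infty}^{\infty} f_1(t)\, e^{-i\omega t}\, dt + b\int_{-\infty}^{\infty} f_2(t)\, e^{-i\omega t}\, dt \\
&= a F_1(\omega) + b F_2(\omega).
\end{aligned}
\]
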
Reciprocal broadening/scaling

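Rescaling the argument of a function by a factor \(\alpha\) rescales its Fourier Transform by the reciprocal factor: stretching the function compresses its transform, and vice versa. Consider the transform of a function \(f({\alpha}t)\):

\[
\mathcal{F}[f(\alpha t)] = \int_{-\infty}^{\infty} f(\alpha t)\, e^{-i\omega t}\, dt = \frac{1}{|\alpha|}\int_{-\infty}^{\infty} f(s)\, e^{-i(\omega/\alpha)s}\, ds = \frac{1}{|\alpha|}\, F\!\left(\frac{\omega}{\alpha}\right),
\]

where we substituted \(s = \alpha t\); the modulus appears because the limits of integration swap when \(\alpha < 0\).

This shows that as the function gets broader (\(|\alpha| < 1\)), its transform not only becomes narrower but, because of the \(1\,/\,|\alpha|\) factor, also increases in amplitude.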
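A minimal sketch that checks \(\mathcal{F}[f(\alpha t)] = F(\omega/\alpha)/|\alpha|\) numerically for one stretch (\(\alpha = 0.5\)) and one compression combined with a reversal (\(\alpha = -2\)). The test function is hypothetical and deliberately not symmetric, so that the sign of \(\alpha\) matters; the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\) is assumed.

```python
import numpy as np

t = np.linspace(-30, 30, 6001)
dt = t[1] - t[0]
omega = np.linspace(-8, 8, 161)

def ft(samples, w):
    """Riemann-sum Fourier transform at angular frequencies w, assuming F(w) = ∫ f(t) e^{-iwt} dt."""
    return np.exp(-1j * np.outer(w, t)) @ samples * dt

def f(x):
    return np.exp(-x**2 / 2) * (1 + 0.5 * x)   # hypothetical test function, neither even nor odd

errors = {}
for alpha in (0.5, -2.0):
    lhs = ft(f(alpha * t), omega)                  # transform of the scaled function f(alpha t)
    rhs = ft(f(t), omega / alpha) / abs(alpha)     # (1/|alpha|) F(omega / alpha)
    errors[alpha] = np.max(np.abs(lhs - rhs))
    print(alpha, errors[alpha])
```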

Translation

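Shifting a function by an amount \(t_0\) multiplies its Fourier Transform by a phase factor, leaving the magnitude of the transform unchanged:

\[
\mathcal{F}[f(t - t_0)] = \int_{-\infty}^{\infty} f(t - t_0)\, e^{-i\omega t}\, dt = e^{-i\omega t_0} \int_{-\infty}^{\infty} f(s)\, e^{-i\omega s}\, ds = e^{-i\omega t_0} F(\omega),
\]

where we substituted \(s = t - t_0\).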
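A minimal sketch of the shift property, assuming the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\) and a hypothetical test function: shifting by \(t_0 = 1.5\) (an arbitrary choice) should multiply the transform by \(e^{-i\omega t_0}\) and leave \(|F(\omega)|\) unchanged.

```python
import numpy as np

t = np.linspace(-30, 30, 6001)
dt = t[1] - t[0]
omega = np.linspace(-8, 8, 161)
t0 = 1.5                                        # arbitrary shift

def ft(samples):
    """Riemann-sum Fourier transform, assuming F(w) = ∫ f(t) e^{-iwt} dt."""
    return np.exp(-1j * np.outer(omega, t)) @ samples * dt

def f(x):
    return np.exp(-x**2 / 2) * (1 + 0.5 * x)    # hypothetical test function

F = ft(f(t))
F_shifted = ft(f(t - t0))

phase_error = np.max(np.abs(F_shifted - np.exp(-1j * omega * t0) * F))
magnitude_error = np.max(np.abs(np.abs(F_shifted) - np.abs(F)))
print(phase_error, magnitude_error)              # both close to 0
```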
Derivatives and integrals

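Taking the transform of a derivative amounts to a multiplication of the original transform, such that

\[
\mathcal{F}[f'(t)] = i\omega F(\omega).
\]

To prove this, we write \(f(t)\) as an inverse Fourier Transform and differentiate under the integral sign:

\[
f'(t) = \frac{d}{dt}\left[ \frac{1}{2\pi}\int_{-\infty}^{\infty} F(\omega)\, e^{i\omega t}\, d\omega \right] = \frac{1}{2\pi}\int_{-\infty}^{\infty} i\omega F(\omega)\, e^{i\omega t}\, d\omega.
\]
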
This can be identified as an inverse Fourier Transform, such that

\[
f'(t) = \mathcal{F}^{-1}[i\omega F(\omega)].
\]

Thus, taking the Fourier Transform of \(f'(t)\) proves the above statement:

\[
\mathcal{F}[f'(t)] = i\omega F(\omega).
\]

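We can generalise this result to higher-order derivatives. For the \(n\)th derivative,

\[
\mathcal{F}\left[f^{(n)}(t)\right] = (i\omega)^n F(\omega).
\]

This shows that differentiation amplifies high frequencies (through the factor \(|\omega|^n\)) and that each differentiation shifts the phase of the transform by \({\pi}\,/\,{2}\), since \(i = e^{i\pi/2}\).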
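A minimal check of the first- and second-derivative results, assuming the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\) and using the Gaussian \(f(t) = e^{-t^2/2}\), whose derivatives \(f'(t) = -t\,e^{-t^2/2}\) and \(f''(t) = (t^2 - 1)\,e^{-t^2/2}\) are known exactly, so no numerical differentiation is needed.

```python
import numpy as np

t = np.linspace(-20, 20, 4001)
dt = t[1] - t[0]
omega = np.linspace(-6, 6, 121)

def ft(samples):
    """Riemann-sum Fourier transform, assuming F(w) = ∫ f(t) e^{-iwt} dt."""
    return np.exp(-1j * np.outer(omega, t)) @ samples * dt

gauss = np.exp(-t**2 / 2)
d1 = -t * gauss                   # exact first derivative
d2 = (t**2 - 1) * gauss           # exact second derivative

F = ft(gauss)
err1 = np.max(np.abs(ft(d1) - 1j * omega * F))         # F[f'] = (i w) F(w)
err2 = np.max(np.abs(ft(d2) - (1j * omega)**2 * F))    # F[f''] = (i w)^2 F(w)
print(err1, err2)
```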

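Using this result, we can show how integration affects the transform of a function. Let \(g(t) = \int_{-\infty}^{t} f(s)\, ds\) and suppose that \(F(0) = \int_{-\infty}^{\infty} f(t)\, dt = 0\), so that \(g(t)\) vanishes as \(t \to \pm\infty\). Since \(g'(t) = f(t)\), the derivative result gives \(i\omega\,\mathcal{F}[g(t)] = F(\omega)\), and therefore

\[
\mathcal{F}\left[\int_{-\infty}^{t} f(s)\, ds\right] = \frac{F(\omega)}{i\omega},
\]

so integration suppresses high frequencies.
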
Convolution

Any discussion of Fourier Series and Transforms must include convolutions. Simply stated, the convolution of two functions is the integral, over all values of the integration variable, of the product of the two functions after one of them has been reversed and shifted.

The convolution of two functions, \(a(t)\) and \(b(t)\), is denoted by \(a(t) * b(t)\) and defined as

\[
a(t) * b(t) = \int_{-\infty}^{\infty} a(u)\, b(t - u)\, du,
\]

where \(u\) is a dummy integration variable that disappears once the integral is evaluated. Convolution is commutative, \(a(t) * b(t) = b(t) * a(t)\) (substitute \(u \to t - u\)), so the ordering is not important.
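A minimal sketch of the definition, assuming samples on a uniform grid that is symmetric about \(t = 0\) with an odd number of points, so that `np.convolve(..., mode="same")` multiplied by the spacing `dt` approximates the convolution integral at the same grid points. Two normalised Gaussians (illustrative widths 0.6 and 0.8) are used because their convolution is known: another normalised Gaussian whose variance is the sum of the two variances.

```python
import numpy as np

def gaussian(t, sigma):
    """Normalised Gaussian with standard deviation sigma, centred on t = 0."""
    return np.exp(-t**2 / (2 * sigma**2)) / (sigma * np.sqrt(2 * np.pi))

t = np.linspace(-10, 10, 2001)   # odd length, symmetric about t = 0
dt = t[1] - t[0]
a = gaussian(t, 0.6)
b = gaussian(t, 0.8)

# Riemann-sum approximation of (a * b)(t) = ∫ a(u) b(t - u) du on the same grid
conv_ab = np.convolve(a, b, mode="same") * dt
conv_ba = np.convolve(b, a, mode="same") * dt   # commutativity: same result

# Expected: a Gaussian with sigma = sqrt(0.6**2 + 0.8**2) = 1.0
expected = gaussian(t, 1.0)
print(np.max(np.abs(conv_ab - expected)), np.max(np.abs(conv_ab - conv_ba)))
```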

Convolution is perhaps one of the most important tools for a scientist of any discipline. This can be illustrated with a simple example. Imagine you have just measured the magnitude of a magnetic field in a particular direction using a magnetometer. Those measurements have an inherent error due to the finite precision of the instrument you used. This error smears out the distribution of your results; in other words, the true distribution has been convolved with the error function. Therefore, to recover the original (true) distribution of your measurements, you need to use the Convolution Theorem, detailed below.

The convolution theorem

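Let us examine what happens when we apply a Fourier Transform to a convolution of two functions:

\[
\begin{aligned}
\mathcal{F}[a(t) * b(t)] &= \int_{-\infty}^{\infty} \left[ \int_{-\infty}^{\infty} a(u)\, b(t - u)\, du \right] e^{-i\omega t}\, dt \\
&= \int_{-\infty}^{\infty} a(u) \left[ \int_{-\infty}^{\infty} b(t - u)\, e^{-i\omega t}\, dt \right] du \\
&= \int_{-\infty}^{\infty} a(u)\, e^{-i\omega u}\, du \int_{-\infty}^{\infty} b(s)\, e^{-i\omega s}\, ds \\
&= \mathcal{F}[a(t)]\; \mathcal{F}[b(t)],
\end{aligned}
\]

where the integrals separate after making the substitution \(s = t - u\) in the inner integral, since \(u\) then no longer appears in it. This final expression is known as the Convolution Theorem. It is one of the most important properties of Fourier Transforms and lies at the heart of Fourier analysis. The Convolution Theorem states that the Fourier Transform of the convolution of two functions is equal to the product of the Fourier Transforms of each function. Looking at it in the opposite direction, the Fourier Transform of the product of two functions is given by the convolution of their individual Fourier Transforms, up to a constant factor that depends on the convention:

\[
\mathcal{F}[a(t)\, b(t)] = \frac{1}{2\pi}\, \mathcal{F}[a(t)] * \mathcal{F}[b(t)].
\]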
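A minimal numerical check of the theorem, assuming the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\). It convolves an illustrative Gaussian with a hypothetical odd function \(b(t) = t\,e^{-t^2}\) using a Riemann-sum approximation (`np.convolve` times the grid spacing, on an odd-length grid symmetric about \(t = 0\)), then compares the transform of the result with the product of the individual transforms.

```python
import numpy as np

t = np.linspace(-15, 15, 3001)          # odd length, symmetric about t = 0
dt = t[1] - t[0]
omega = np.linspace(-10, 10, 201)

def ft(samples):
    """Riemann-sum Fourier transform, assuming F(w) = ∫ f(t) e^{-iwt} dt."""
    return np.exp(-1j * np.outer(omega, t)) @ samples * dt

a = np.exp(-t**2 / (2 * 0.6**2))        # illustrative Gaussian
b = t * np.exp(-t**2)                   # hypothetical odd function

conv = np.convolve(a, b, mode="same") * dt     # approximates (a * b)(t) on the grid

# Convolution theorem: F[a * b] = F[a] F[b]
mismatch = np.max(np.abs(ft(conv) - ft(a) * ft(b)))
print(mismatch)
```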
Returning to the magnetometer example above, we can now use the Convolution Theorem to retrieve the true distribution from a set of data that has been convolved with an error function. Simply apply a Fourier Transform to the measured (convolved) distribution and divide it by the Fourier Transform of the known error function. The result is the Fourier Transform of the true distribution, and an inverse Fourier Transform then recovers the true distribution itself.
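A minimal sketch of this procedure with noiseless, simulated data. The "true" distribution (two Gaussian peaks) and the Gaussian error function are hypothetical choices, the transforms are Riemann sums under the convention \(F(\omega) = \int f(t)\,e^{-i\omega t}\,dt\) with \(1/2\pi\) on the inverse, and the frequency range is cut off at \(|\omega| = 15\), where the transform of the true distribution is already negligible.

```python
import numpy as np

def gaussian(x, mu, sigma):
    """Normalised Gaussian with mean mu and standard deviation sigma."""
    return np.exp(-(x - mu)**2 / (2 * sigma**2)) / (sigma * np.sqrt(2 * np.pi))

t = np.linspace(-10, 10, 1001)          # odd length, symmetric about t = 0
dt = t[1] - t[0]
omega = np.linspace(-15, 15, 601)
dw = omega[1] - omega[0]

def ft(samples):                        # F(w) = ∫ g(t) e^{-iwt} dt
    return np.exp(-1j * np.outer(omega, t)) @ samples * dt

def ift(F_vals):                        # g(t) = (1/2π) ∫ G(w) e^{iwt} dw, real part kept
    return (np.exp(1j * np.outer(t, omega)) @ F_vals * dw / (2 * np.pi)).real

# Hypothetical true distribution and instrument error function
true = 0.5 * gaussian(t, -1.5, 0.5) + 0.5 * gaussian(t, 1.0, 0.5)
error = gaussian(t, 0.0, 0.3)

# Simulated measurement: the true distribution convolved with the error function
measured = np.convolve(true, error, mode="same") * dt

# Convolution theorem: F[measured] = F[true] F[error], so divide and transform back
recovered = ift(ft(measured) / ft(error))
print(np.max(np.abs(recovered - true)))
```

Because these data are noiseless, the plain division works. With real, noisy measurements, dividing by the small values of the error function's transform at high frequencies amplifies the noise, and a regularised variant such as Wiener deconvolution is the usual remedy.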

References

Material used in this notebook is based on the “Fourier Transforms” course by Professor Carlo Contaldi, provided by the Physics Department.